Search Engine Optimization (SEO) remains a critical driver of business visibility. However, a paradoxical threat has emerged that can cripple your website’s performance more effectively than neglect: over-optimization.


For years, the mantra was “more is better”: more keywords, more links, more technical tweaking. Today, Google’s AI-driven ranking systems, from RankBrain to its ongoing helpful-content updates, are designed to demote sites that try too hard. Over-optimization is the digital equivalent of a salesperson trying desperately to close a deal; it feels unnatural, manipulative, and ultimately drives the “customer” (both the user and the search engine) away.

This comprehensive guide will define what over-optimization looks like in the current search landscape, detail the severe business drawbacks of falling into this trap, provide a diagnostic framework to audit your own site, and offer a step-by-step recovery plan to restore your site’s organic health and trustworthiness.

What is Over-Optimization in 2026?

At its core, website over-optimization is the practice of implementing SEO tactics to such an extreme degree that the website’s primary focus shifts from serving the user to manipulating search engine rankings.

It crosses the fine line between a perfectly tuned engine and one tuned so tightly that it blows up on the starting line.

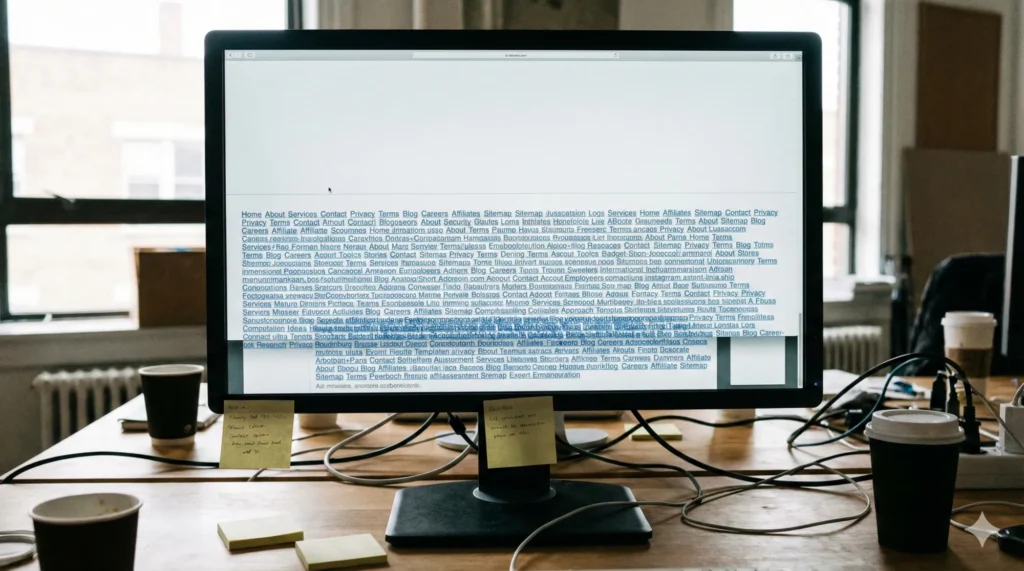

Ten years ago, over-optimization was easy to spot: white text on a white background, or the phrase “cheap flights to Vegas” repeated fifty times in a footer. Today, it is far more subtle. It is the symptom of an obsessive approach to technical SEO and content structuring, one that produces a web presence that feels robotic, clinical, and engineered.

Google’s primary goal is to connect users with helpful, authentic, human-centric content (this is the core of their E-E-A-T framework—Experience, Expertise, Authoritativeness, and Trustworthiness). When you over-optimize, you are signaling to Google that you care more about their algorithm than their users. You are trying to “trick” the system into thinking your content is relevant through artificial patterns rather than genuine quality.

Over-optimization is the “Uncanny Valley” of websites. Just as realistic robots that aren’t quite human make people uneasy, websites that are technically perfect but lack human nuance make both users and search bots uneasy.

The Business Drawbacks: Why the “Hard Sell” Fails

Many business owners fall into the over-optimization trap with good intentions. They hire an aggressive SEO agency or buy tools that promise “green scores,” believing that maximizing every metric is the path to growth.

However, the consequences of over-optimization are severe and directly impact the bottom line. It is not just about losing rankings; it is about losing business integrity.

The “Algorithmic Ceiling” and De-indexing

The most immediate drawback is algorithmic suppression. Google’s spam filters and core ranking systems are trained to detect unnatural patterns. If your site triggers these filters, you hit an invisible ceiling. No matter how much new content you publish or how many links you build, your traffic stagnates or slowly bleeds out. In severe cases of manipulative behaviour (like mass-generating thousands of AI pages solely for keyword coverage), parts of your site may be de-indexed entirely, disappearing from Google overnight.

Conversion Rate Poison

Over-optimized content creates a profound disconnect between a brand and its audience. When a website is written primarily for a crawler rather than a person, the prose inevitably feels mechanical, reading more like a tedious legal contract or a generic instruction manual than a helpful resource. Instead of engaging the reader with a compelling narrative or a unique brand voice, the text feels clinical and hollow. This robotic approach strips away the personality of the business, leaving potential clients with a cold, impersonal impression that fails to build the rapport necessary for a successful commercial relationship.

This leads to a phenomenon known as “cognitive friction.” When a user lands on a service page—perhaps searching for a specialized local contractor—they expect a clear, intuitive value proposition that addresses their specific pain points. Instead, they are often met with linguistic gymnastics such as, “If you are looking for the best plumber in Niagara Falls, our Niagara Falls plumbing services are the top rated plumber solutions.” This repetition forces the reader’s brain to work harder just to extract basic information. When language feels this unnatural, humans subconsciously flag it as deceptive or manipulative, creating an immediate psychological barrier.

Ultimately, the most significant business drawback is the massive gap between traffic and revenue. You may succeed in “tricking” a search engine into ranking a page, but you cannot trick a human into reaching for their wallet. High bounce rates are a predictable result of over-optimization: users quickly realize they are being marketed to by a machine and abandon the site in favour of a competitor who speaks their language. Visibility without conversion is a hollow victory. A business that prioritizes keyword density over clarity may capture the click, but it will consistently fail to capture the customer.

The Real-World Consequences of Over-Optimized Content:

- Immediate Brand Devaluation: Visitors perceive the company as amateur, “cheap,” or out of touch with modern standards.

- Trust Erosion: Unnatural phrasing acts as a red flag, making users question the legitimacy and expertise of the service provider.

- Reduced Retention: Users spend significantly less time on the page, leading to poor “dwell time” metrics that eventually hurt your search rankings.

- Zero Conversion Momentum: Robotic text fails to guide the user toward a Call to Action (CTA), leaving them frustrated and more likely to return to the search results.

Erosion of Brand Trust (E-E-A-T Damage)

Trust is the invisible foundation upon which all sustainable search visibility is built. In an era where AI-generated content and aggressive digital marketing have flooded the internet, search engines have pivoted their algorithms to act as “trust proxies” for the user. Google’s E-E-A-T (Experience, Expertise, Authoritativeness, and Trustworthiness) framework isn’t just a guideline; it is a defensive perimeter designed to filter out the insincere. When a website prioritizes technical manipulation over genuine helpfulness, it creates a “trust deficit” that is nearly impossible to overcome. Because search engines use human-centric data—such as how long a user stays on a page or whether they return to your site—any signal of over-optimization becomes a signal of unreliability.

Beyond the algorithms, trust is the primary driver of customer acquisition and retention. Over-optimization signals a lack of authenticity that savvy modern users detect almost instantly. If your internal linking structure feels contrived or your anchor text is aggressively sales-focused, you aren’t just annoying a bot; you are telling a potential client that your primary interest is a search rank, not their specific problem. A site that feels over-engineered damages the long-term reputation of the brand, as users instinctively associate clinical, “robotic” presentation with a lack of personal care and professional integrity. In 2026, if your website doesn’t feel like it was built by a human who cares about the topic, it will never earn the authority required to dominate the first page.

Wasted Resources on “Vanity Metrics”

This phenomenon is a classic manifestation of the law of diminishing returns. In the early stages of a project, moving a PageSpeed score from 40 to 80 provides a tangible, high-impact boost in user retention and search visibility. However, the labour required to move from a 95 to a perfect 100 is often exponentially greater, requiring deep code refactoring or the removal of valuable features for a marginal gain that no human visitor will ever discern. This is the “Perfect Score Trap,” where technical metrics become the end goal rather than the means to a commercial end.

The true danger for a growing business lies in the opportunity cost. Every hour your team spends obsessing over micro-adjustments to meta-tag character counts is an hour they aren’t spending building interactive sales tools, refining your conversion funnel, or creating the kind of “Pillar Content” that establishes your brand as a Niagara industry leader. By prioritizing these micro-optimizations, businesses inadvertently starve their creative and strategic departments of the oxygen they need to innovate.

In the competitive marketplace, a “perfect” website that offers nothing unique will consistently lose to a “good” website that provides profound value and a seamless path to purchase. Digital success is ultimately measured in revenue growth and client trust, not in the binary satisfaction of a green Lighthouse score.

The Diagnostic Toolkit: 7 Signs Your Site is Over-Optimized

How do you know if you have crossed the line? Over-optimization rarely happens in one day; it is usually a slow accumulation of “best practices” taken too far.

Here are the seven most common symptoms to look for during a site audit in 2026.

Symptom 1: The “Robot Read-Aloud” Test (Keyword Stuffing 2.0)

Classic keyword stuffing is rare, but “semantic stuffing” is common. This happens when writers are forced to work secondary keywords and related questions into paragraphs where they don’t naturally fit.

The Test: Read your top-ranking landing page out loud. If you find yourself stumbling over awkward phrasing, repeating the same concept in slightly different words, or sounding like a salesperson trying too hard, your content is over-optimized. Better yet, paste your content into Microsoft Word and use its Read Aloud feature. If it sounds terrible to you, it sounds terrible to everyone.
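The read-aloud test is deliberately subjective. If you want a rough number to go with it, the minimal Python sketch below counts how often a target phrase appears per 100 words. `phrase_density` is a hypothetical helper, not a feature of any SEO tool, and Google publishes no “safe” density, so treat the output as a prompt to reread the page rather than a score to optimize.

```python
import re

def phrase_density(text: str, phrase: str) -> float:
    """Occurrences of `phrase` per 100 words of `text`, case-insensitive."""
    # Crude tokenizer: lowercase runs of letters, digits, and apostrophes.
    words = re.findall(r"[a-z0-9']+", text.lower())
    target = re.findall(r"[a-z0-9']+", phrase.lower())
    if not words or not target:
        return 0.0
    n = len(target)
    # Slide an n-word window across the text and count exact phrase matches.
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == target)
    return 100.0 * hits / len(words)

# The "linguistic gymnastics" plumber sentence from earlier in this guide.
sample = ("If you are looking for the best plumber in Niagara Falls, our Niagara Falls "
          "plumbing services are the top rated plumber solutions.")
print(f"{phrase_density(sample, 'Niagara Falls'):.1f} mentions per 100 words")
```

On that single sentence, the city name turns up roughly 9 times per 100 words, exactly the kind of repetition a listener notices immediately.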

Symptom 2: Aggressive Exact-Match Anchor Text

Anchor text is the clickable text in a hyperlink. In a natural web environment, people link with varied phrases like “click here,” “check out this guide,” or the brand name.

The Symptom: If 80% of the links pointing to your “Personal Injury Lawyer” page use the exact anchor text “best personal injury lawyer,” you have an over-optimization problem. This unnatural pattern is an easy red flag for search engine bots.
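To check for this pattern, export the anchor texts of links pointing at one page (most backlink tools can export them) and measure what share exactly match your target phrase. This is a minimal Python sketch; `exact_match_share`, the sample anchors, and the brand name “Smith & Co. Law” are hypothetical, and any cutoff you apply is a judgment call, not a published Google threshold.

```python
from collections import Counter

def normalize(anchor: str) -> str:
    """Lowercase and collapse whitespace so trivial variants compare equal."""
    return " ".join(anchor.lower().split())

def exact_match_share(anchors: list[str], money_phrase: str) -> float:
    """Fraction of anchors that exactly match the target (money) phrase."""
    if not anchors:
        return 0.0
    target = normalize(money_phrase)
    return sum(normalize(a) == target for a in anchors) / len(anchors)

# Hypothetical export: anchors pointing at a "Personal Injury Lawyer" page.
anchors = ["best personal injury lawyer"] * 8 + ["Smith & Co. Law", "this guide"]
share = exact_match_share(anchors, "Best Personal Injury Lawyer")
print(f"{share:.0%} of anchors are exact-match")
print(Counter(normalize(a) for a in anchors).most_common(3))
```

The `Counter` line shows the most frequent anchors, which makes a lopsided profile obvious at a glance.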

Symptom 3: Internal Link Vomit

Internal linking is vital for SEO, as it helps bots crawl your site and distributes authority. However, many sites abuse this by turning every other word into a hyperlink.

The Symptom: If a user cannot read a paragraph on your blog without seeing 10 different blue underlined words pointing to unrelated service pages, your internal linking is over-optimized. It looks cluttered, distracts the user, and signals to Google that the links exist to manipulate rankings rather than to help readers.
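A quick way to spot link-heavy copy is to count the links in each paragraph of a rendered page. The sketch below uses Python’s standard-library `html.parser`; `LinkDensityParser` and `links_per_paragraph` are hypothetical names, and how many links per paragraph is “too many” is left to your judgment.

```python
from html.parser import HTMLParser

class LinkDensityParser(HTMLParser):
    """Records how many <a> tags appear inside each <p> element."""

    def __init__(self) -> None:
        super().__init__()
        self.in_paragraph = False
        self.counts: list[int] = []

    def handle_starttag(self, tag, attrs):
        if tag == "p":
            self.in_paragraph = True
            self.counts.append(0)  # start a tally for the new paragraph
        elif tag == "a" and self.in_paragraph:
            self.counts[-1] += 1

    def handle_endtag(self, tag):
        if tag == "p":
            self.in_paragraph = False

def links_per_paragraph(html: str) -> list[int]:
    parser = LinkDensityParser()
    parser.feed(html)
    parser.close()
    return parser.counts

sample_html = (
    '<p>Read our <a href="/guide">guide</a> or compare <a href="/pricing">pricing</a>.</p>'
    "<p>This paragraph has no links.</p>"
)
print(links_per_paragraph(sample_html))
```

Run it against the HTML of your longest blog posts and reread any paragraph whose count stands out from the rest.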

Symptom 4: The “Doorway Page” Strategy (Location/Service Spam)

This is a common tactic for local businesses that backfires. It involves creating hundreds of near-identical pages targeting every possible city variation.

The Example: A website with pages for: “Plumber Akron,” “Plumber Canton,” “Plumber Cuyahoga Falls,” “Plumber Stow,” etc., where the only difference on the page is the city name swapped out dynamically. Google’s Helpful Content system views this as low-value, mass-produced spam designed only for search engines, not users.
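Near-identical location pages can be flagged by comparing their body text pairwise. This minimal sketch uses Python’s `difflib.SequenceMatcher`; the 0.9 similarity threshold, the page template, and the URLs are illustrative assumptions. Pairwise comparison grows quadratically, so this suits dozens of pages rather than tens of thousands.

```python
from difflib import SequenceMatcher
from itertools import combinations

def near_duplicates(pages: dict[str, str], threshold: float = 0.9) -> list[tuple[str, str, float]]:
    """Return (url_a, url_b, ratio) for page pairs whose text similarity meets the threshold."""
    flagged = []
    for (url_a, text_a), (url_b, text_b) in combinations(pages.items(), 2):
        ratio = SequenceMatcher(None, text_a, text_b).ratio()  # 1.0 means identical
        if ratio >= threshold:
            flagged.append((url_a, url_b, round(ratio, 2)))
    return flagged

# Hypothetical doorway pages: one template with only the city name swapped in.
template = ("Need a reliable plumber in {city}? Our licensed team serves {city} "
            "homeowners with emergency repairs, drain cleaning and water heater installs.")
pages = {f"/plumber-{city.lower()}": template.format(city=city)
         for city in ["Akron", "Canton", "Stow"]}

for url_a, url_b, ratio in near_duplicates(pages):
    print(f"{url_a} vs {url_b}: {ratio:.0%} similar")
```

Every pair is flagged, because the only differences between the pages are the swapped city names.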

Symptom 5: Over-Engineered Structured Data (Schema Abuse)

Schema markup helps search engines understand content. Over-optimization occurs when you mark up content that doesn’t exist or try to manipulate rich snippets incorrectly.

The Symptom: Using “Recipe” schema for a blog post that isn’t a recipe just to get an image in search results, or packing keywords into the description fields of your organization schema. Google can respond to misleading structured data with a manual action, a penalty applied by a human reviewer that can make your pages ineligible for rich results.

Symptom 6: The Footer/Sidebar Link Dump

Look at the very bottom of your website.

The Symptom: Does your footer contain a dense block of 50 tiny links to every single sub-service and location page on your site? This archaic tactic, often called a “link dump,” provides zero value to a human user and appears highly manipulative to search engines.
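To put a number on a footer link dump, count the links inside the page’s `<footer>` element. This sketch again relies on Python’s `html.parser` and assumes your theme uses a semantic `<footer>` tag; `footer_link_count` is a hypothetical helper, and the sample page below is synthetic.

```python
from html.parser import HTMLParser

class FooterLinkCounter(HTMLParser):
    """Counts <a href="..."> links that appear inside <footer> elements."""

    def __init__(self) -> None:
        super().__init__()
        self.footer_depth = 0  # >0 while the parser is inside a <footer>
        self.links = 0

    def handle_starttag(self, tag, attrs):
        if tag == "footer":
            self.footer_depth += 1
        elif tag == "a" and self.footer_depth > 0 and dict(attrs).get("href"):
            self.links += 1

    def handle_endtag(self, tag):
        if tag == "footer" and self.footer_depth > 0:
            self.footer_depth -= 1

def footer_link_count(html: str) -> int:
    counter = FooterLinkCounter()
    counter.feed(html)
    counter.close()
    return counter.links

# Synthetic page: one link in the main content, fifty crammed into the footer.
page = ('<main><a href="/">Home</a></main><footer>'
        + "".join(f'<a href="/service-{i}">Service {i}</a>' for i in range(50))
        + "</footer>")
print(footer_link_count(page))
```

Only the footer links are counted; the link in `<main>` is ignored.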

Symptom 7: Obsessive Technical Perfectionism at the Expense of UX

Technical SEO is crucial, but it has diminishing returns.

The Symptom: Stripping out high-quality product images or helpful interactive elements (like video) just to get your mobile page speed score from a 92 to a 98. You have optimized the speed metric, but de-optimized the user experience and likely hurt your conversion rate.

How to Detox and De-Optimize

If you identified with several symptoms in the diagnostic section, do not panic. Over-optimization is reversible. The recovery process involves a strategic shift from focusing on algorithms to focusing on users—often referred to as “de-optimization.”

This process will likely cause temporary ranking fluctuations, but it is necessary for building a sustainable, penalty-proof foundation for the future.

Step 1: The Content Humanity Audit

To truly fix a site that has been over-engineered, you must perform what we call a “Content Humanity Audit.” This process requires you to temporarily step away from your SEO dashboard and review your primary revenue-driving pages with a strictly critical, human eye. While software tools are excellent at providing “green scores” for keyword density and technical metrics, they are fundamentally incapable of detecting the subtle emotional nuances that build trust or the “robotic” friction that drives users away.

Action: Rewrite awkward, keyword-heavy paragraphs. Focus on flow, tone, and clarity. Use synonyms naturally. If a sentence exists solely to house a keyword, delete it. Ask yourself: “Would I proudly show this page to a skeptical CEO?” If not, rewrite it.

Step 2: Dilute and Naturalize Anchor Text

You should implement a “link dilution” strategy: replace aggressive, keyword-heavy internal anchors with descriptive, navigational phrases or simple branded terms. Externally, ensure your backlink profile reflects organic growth rather than manufactured symmetry. By prioritizing a varied and contextual link ecosystem, you move away from transparent ranking tactics and toward a high-authority structure that satisfies both human intuition and search engine requirements.

Action: Audit your internal links. Change a significant percentage of “money keyword” anchors (e.g., “buy cheap insurance”) to softer, navigational anchors (e.g., “view our insurance options,” “learn more,” or the brand name). Ensure links are placed only where they add genuine value and context to the current article.

Step 3: The “Zombieland” Pruning Strategy

Audit your site for “zombie” pages: thin, duplicate content created solely to trap long-tail keyword variations. Google’s spam policies treat pages like these as doorway pages, and they can drag down how Google assesses your site as a whole. Prune underperforming assets and consolidate them into a single, authoritative pillar page. This eliminates technical debt, improves crawl efficiency, and signals genuine expertise to search engines and human visitors alike.

Action: Be ruthless. If you have 50 near-identical location pages that receive little or no traffic, delete them and redirect (301) their URLs to a single, high-quality, comprehensive “Areas We Serve” page. Quality always beats quantity in 2026.
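If you have visit counts per URL (for example, exported from your analytics tool), the redirect list for a consolidation can be generated rather than typed by hand. This minimal sketch emits rules in the syntax of Apache’s `mod_alias` `Redirect` directive. The visit counts, the 10-visit cutoff, and the `/areas-we-serve` hub URL are hypothetical, and other servers or CMS redirect plugins need their own syntax.

```python
def build_redirects(visits: dict[str, int], hub: str, min_visits: int = 10) -> list[str]:
    """Emit Apache mod_alias-style 301 rules sending low-traffic pages to one hub URL."""
    return [
        f"Redirect 301 {url} {hub}"
        for url, count in sorted(visits.items())  # sorted for a stable, reviewable list
        if count < min_visits and url != hub      # never redirect the hub to itself
    ]

# Hypothetical monthly visits per location page.
visits = {"/plumber-akron": 3, "/plumber-canton": 0, "/plumber-stow": 42, "/areas-we-serve": 120}
for rule in build_redirects(visits, hub="/areas-we-serve"):
    print(rule)
```

In this example `/plumber-stow` survives because it clears the cutoff; review the generated rules by hand before deploying them.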

Step 4: Clean Up the Footer and Sidebars

Stop using footers as robotic link repositories. In 2026, dense, keyword-stuffed link blocks signal manipulation to Google. Audit your site’s navigation; if a link doesn’t serve a human’s journey, remove it. Prioritize an intuitive structure focused on clarity. Clean navigation builds trust, reduces bounce rates, and ensures your brand feels professional rather than over-engineered.


Action: Remove site-wide “link dumps” in footers and sidebars. Keep navigation concise and helpful. If a link isn’t valuable enough to be in the main menu or within relevant body content, it probably shouldn’t be sitewide in the footer.

Step 5: Review Schema Implementation

Validate your structured data using Google’s Rich Results Test to ensure your schema markup (JSON-LD) accurately reflects visible on-page content. Avoid stuffing metadata with hidden keywords; misleading markup can earn a manual action. Integrity in your code is as vital for E-E-A-T as the text itself.

Action: Use Google’s Rich Results Test tool to validate your schema. Ensure you aren’t miscategorizing content or stuffing keywords into schema properties. Only mark up what is visible and true to the user.
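The Rich Results Test focuses on whether your markup is valid and eligible for rich results. As a rough complement, the sketch below flags top-level JSON-LD fields whose text does not appear in the page’s visible copy. It is a hypothetical heuristic built only on Python’s standard `json` module; it ignores nested objects and paraphrases, and the “Example Plumbing” markup is invented for illustration.

```python
import json

def fields_not_visible(jsonld: str, visible_text: str,
                       fields: tuple[str, ...] = ("name", "description")) -> list[str]:
    """List top-level JSON-LD fields whose string values don't appear in the visible text."""
    data = json.loads(jsonld)
    page = " ".join(visible_text.lower().split())  # normalize case and whitespace
    missing = []
    for field in fields:
        value = data.get(field)
        if isinstance(value, str) and " ".join(value.lower().split()) not in page:
            missing.append(field)
    return missing

# Hypothetical Organization markup with a keyword-stuffed description.
jsonld = json.dumps({
    "@context": "https://schema.org",
    "@type": "Organization",
    "name": "Example Plumbing",
    "description": "best plumber Niagara Falls cheap plumber emergency plumber",
})
visible = "Example Plumbing repairs leaks and installs water heaters across the region."
print(fields_not_visible(jsonld, visible))
```

Here the business name checks out, but the stuffed description is flagged because no visitor would ever see that text on the page.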

Step 6: Shift Focus to E-E-A-T Signals

Shift from micro-managing keyword density to building E-E-A-T. Modern algorithms prioritize transparency over engineering. Enhance author bios, publish clear editorial policies, and showcase genuine testimonials. By making your business’s “who, why, and how” transparent, you earn credibility that keyword stuffing can never provide.

Action: Instead of tweaking meta tags, spend that time improving author bios, adding clear editorial policies, citing credible external sources, and showcasing real customer reviews. Make sure the “who, why, and how” of your business is transparent.

The New Paradigm: From SEO to SXO (Search Experience Optimization)

The antidote to over-optimization is a fundamental shift in mindset.

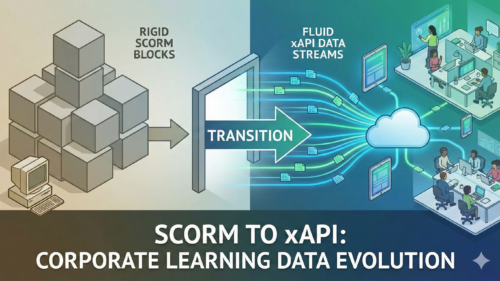

For years, the industry focused on Search Engine Optimization (SEO)—doing things to a website to make a machine like it. The future is Search Experience Optimization (SXO). This philosophy recognizes that Google’s algorithms are now so advanced that the best way to rank number one is just to be the best result for a human.

SXO combines traditional SEO best practices with User Experience (UX) and Conversion Rate Optimization (CRO). In an SXO framework, you don’t ask, “How many times should I use this keyword?” You ask, “Does this page answer the user’s question so thoroughly and clearly that they don’t need to hit the ‘back’ button to look elsewhere?”

If you satisfy the user, you satisfy Google. The two goals are no longer separate.

Conclusion: The Art of Invisible SEO

The ultimate goal of a modern digital strategy is “invisible SEO.”

Like good film editing or sound design, great SEO should be felt but not noticed. The site structure should feel intuitive, not engineered. The content should feel authoritative, not salesy. The links should feel helpful, not forced.

Over-optimization is what happens when you make the tools of the trade visible to the audience. It breaks the immersion.

By auditing your site for the signs of over-engineering and committing to a human-first, E-E-A-T-driven approach, you do more than just avoid Google penalties. You build a resilient digital asset that converts visitors into customers and earns long-term brand loyalty—something no amount of keyword stuffing can ever achieve.

In 2026, the best way to optimize your website is to stop trying so hard to optimize it.

Frequently Asked Questions

What is website over-optimization?

Website over-optimization occurs when SEO tactics—such as keyword repetition, excessive internal linking, or aggressive anchor text—are implemented so intensely that they degrade the user experience. In 2026, Google’s AI-driven algorithms identify these unnatural patterns as manipulative, often resulting in lower rankings or algorithmic penalties.

Can over-optimization cause a Google penalty?

Yes. While manual actions are reserved for severe spam, over-optimization more often triggers algorithmic suppression. Google’s helpful-content and spam-detection systems can classify over-engineered sites as low value, leading to a significant drop in organic visibility and engagement.

How do I fix a keyword-stuffed website?

To fix keyword stuffing, perform a “Humanity Audit”: read your content aloud and remove any phrasing that feels mechanical. Replace repetitive keywords with natural synonyms and prioritize the flow of information over keyword density. The goal is to satisfy user intent, not an algorithm’s word count.

What is the difference between SEO and SXO?

SEO (Search Engine Optimization) focuses on technical signals to rank higher in search engines. SXO (Search Experience Optimization) combines SEO with User Experience (UX) and Conversion Rate Optimization (CRO), ensuring that once a user clicks through, they find the content helpful, easy to navigate, and persuasive.

Why is exact-match anchor text dangerous in 2026?

Exact-match anchor text (e.g., using only “best Niagara plumber” for every link) creates an unnatural pattern that signals link manipulation. Modern search engines prefer “diluted” or natural anchor text, such as branded names or descriptive phrases, which reflect how real people naturally share information.